The words “nuclear physics” tend to conjure images of heavily guarded laboratories or trench-coated spies whispering to each other on park benches and exchanging briefcases full of file folders stamped “Classified: Top Secret.” But despite this reputation for secrecy, today's nuclear scientists embrace openness. And it's paying off.

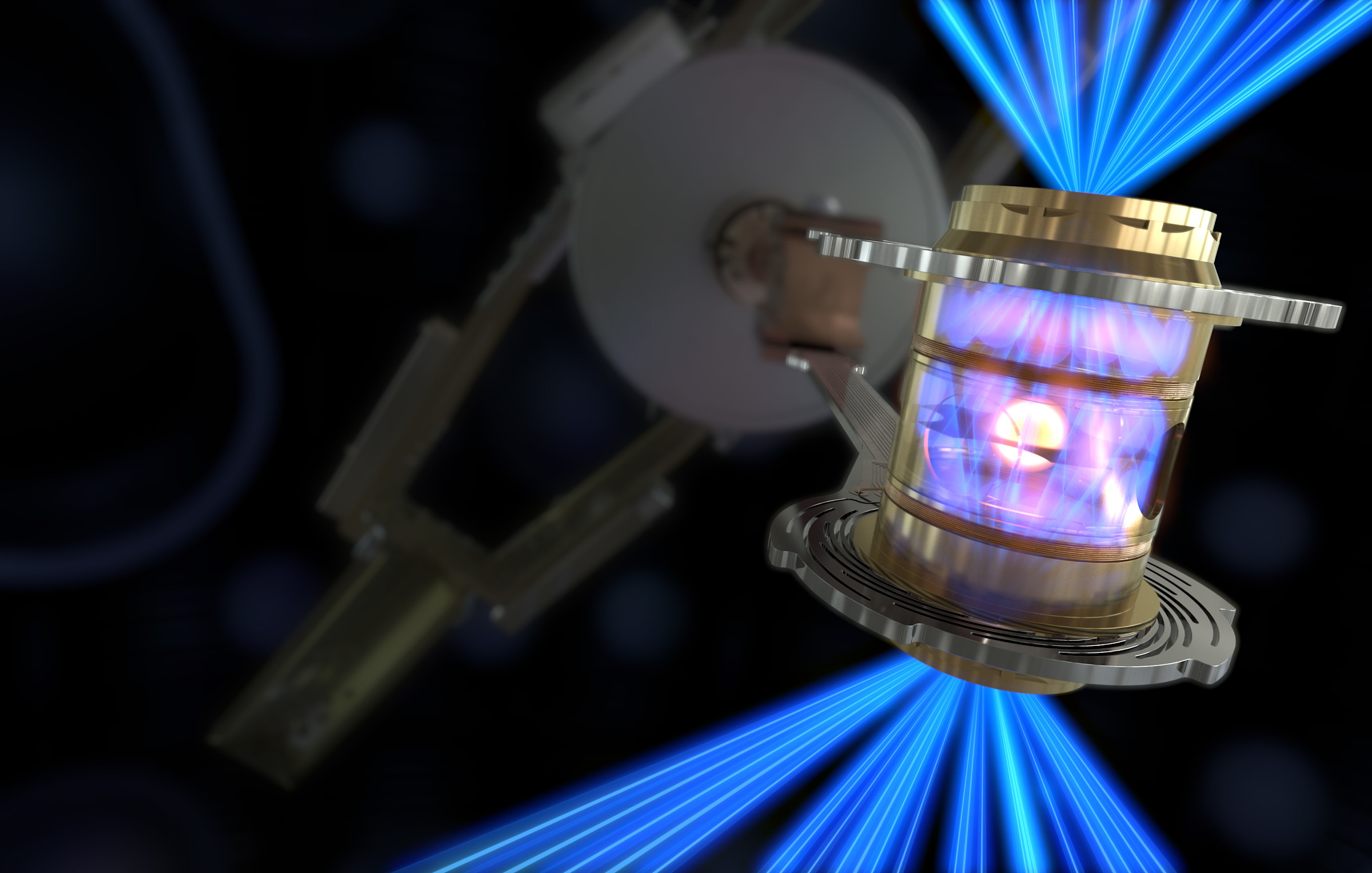

Take December 2022's nuclear fusion breakthrough, for instance. For the first time ever, researchers at the National Ignition Facility (NIF) at the Lawrence Livermore National Laboratory (LLNL) succeeded in creating a controlled nuclear fusion reaction that generated more energy than it took to cause the reaction—an achievement known as "ignition."

Controlled nuclear fusion generates energy by fusing atomic nuclei and could be a safer, cleaner alternative to nuclear fission, the method used in modern nuclear power plants, which splits atoms and produces long-lived radioactive waste. Ideally, nuclear fusion could one day provide plentiful, carbon-free energy. But that goal has remained elusive, despite decades of research. Even NIF's breakthrough comes with important caveats: once you account for the 300 megajoules it took to charge the lasers used in the experiment, the ignition reaction actually consumed more energy than it created. Researchers will need not only to develop more efficient reactions but also to run them reliably and at power-plant scale. Nonetheless, NIF's breakthrough proved that net-positive power generation is possible, and it paves the way for researchers at NIF and at other institutions exploring additional fusion methods.
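To make that caveat concrete, the short Python sketch below works through the arithmetic. The 2.05 MJ of laser energy delivered to the target and the 3.15 MJ of fusion output are the figures LLNL publicly reported for the December 2022 shot; the 300 MJ is the wall-plug figure cited above. The `gain` helper and the constant names are illustrative only, not taken from any LLNL code.

```python
# Energy figures for the December 2022 NIF ignition shot, in megajoules (MJ).
# 2.05 MJ (laser energy on target) and 3.15 MJ (fusion yield) are LLNL's reported
# numbers; 300 MJ is the approximate energy drawn to charge the lasers.
LASER_ENERGY_ON_TARGET_MJ = 2.05
FUSION_YIELD_MJ = 3.15
WALL_PLUG_ENERGY_MJ = 300.0


def gain(output_mj: float, input_mj: float) -> float:
    """Return the ratio of energy out to energy in."""
    return output_mj / input_mj


# Target gain compares fusion output to the laser energy that reached the target.
target_gain = gain(FUSION_YIELD_MJ, LASER_ENERGY_ON_TARGET_MJ)
# Wall-plug gain compares fusion output to the energy needed to fire the lasers.
wall_plug_gain = gain(FUSION_YIELD_MJ, WALL_PLUG_ENERGY_MJ)

print(f"Target gain:    {target_gain:.2f}")
print(f"Wall-plug gain: {wall_plug_gain:.4f}")
```

A target gain above 1 is what "ignition" refers to here; a wall-plug gain of roughly 1% is why a working fusion power plant is still a long way off.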

Open source software played a key role in the ignition breakthrough and will continue to help push the field forward. For example, both fission and fusion experiments are expensive and time-consuming to conduct, so researchers run computer simulations on high-performance computing (HPC) systems, also known as supercomputers, to test ideas before trying them out on real equipment. Researchers at various Department of Energy National Laboratories and Technology Centers, including LLNL, the Idaho National Laboratory, and Argonne National Laboratory, have open sourced a number of tools for running complex physics simulations on HPC systems. These projects are helping push forward not just nuclear physics research and stockpile stewardship but other scientific fields as well, such as radiology and epidemiology.

Many nuclear science organizations have released open source software in recent years, which is a big change from business as usual in the field. Though CERN, which focuses on fundamental particle physics rather than energy generation, is the birthplace of the web and has long embraced open source, other institutions have historically been less open. "There's a history of secrecy in the field. Most fusion and fission software used to be proprietary," says Paul Romano, the project lead for OpenMC and a computational scientist working in nuclear fusion at Argonne National Laboratory. "But as open source has exploded over the past decade, it plays an increasingly important role in research, both in the public and private sectors."

Source: https://www.llnl.gov/news/national-ignition-facility-achieves-fusion-ignition

Open, but not too open

Despite open source’s many benefits, it took time for the nuclear science field to adopt the open source ethos. Using open source tools was one thing—Python's vast ecosystem of mathematical and scientific computing tools is widely used for data analysis in the field—but releasing open source code was quite another.

When computational scientist Derek Gaston began working on the MOOSE framework at the Idaho National Laboratory in 2008, he fully intended to open source the project. The idea was to make supercomputers more accessible to scientists. MOOSE provides a "full-stack" platform and set of APIs that researchers can use to write simulations without having to worry about the underlying computational mathematics required to use HPC hardware effectively. "As a scientist you want your inventions to be used as widely as possible," he says. "I came up during the rise of open source in the late ’90s and early 2000s and benefited greatly from it. Open source was a way to give our scientific output to the world."

Unfortunately, Gaston and his team weren’t able to open source MOOSE until 2014. Although the Department of Energy issued a policy recommendation in 2002 encouraging all of the agency's organizations to release source code unless there were reasons not to, many groups were reluctant, especially in the nuclear science area. "I think we were the first open source project to come out of the Idaho National Lab. No one there had any experience with releasing something like this," Gaston says. "We had to blaze the path for it to exist." Gaston and his colleague Cody Permann, the current manager of the MOOSE project, spent countless hours in meetings to explain the benefits of open source, and how tools like GitHub work. "Some people were worried that the code would be altered maliciously," Gaston says. "We had to explain pull requests and code reviews."

It's easy to see why there would be so much secrecy in nuclear science given the dangers of nuclear weaponry. But secrecy made research and education in nuclear science unnecessarily difficult, particularly in the realm of computing. While much nuclear research was published in academic journals, it was often conducted with software that wasn't widely available, making it more difficult to build upon existing work or interpret data.


"Oftentimes when you wanted access to code, you needed to send someone an email and then sign an agreement restricting your use of the code to certain things," says Ethan Peterson, a research scientist at MIT Plasma Science and Fusion Center. "At the end of all of that you might get a zip file or, if you were lucky, access to a repository."

The time it took to review requests and ensure compliance with U.S. software export controls made some research prohibitively slow. "Depending on your citizen status, it could take years to get access to code," says April Novak, a computational scientist at Argonne National Laboratory and maintainer of Cardinal, a project built on MOOSE. "But fast-turnaround research projects don't have time to wait that long. It limits the scope of what you can do."

Plus, since no one person has expertise in every discipline involved, experiments tend to require contributions from multiple researchers with different specialties. That meant every researcher working on a project needed the necessary clearance. "The nuclear science field is very international, especially in the area of fusion," says Novak. "So having barriers to access is problematic."

Open source software is free from export controls, so it's far easier to share across international borders. But, to be clear, there's still quite a bit of security around the computational work done at the labs. "We don't open source anything with any capability that could be used for weapons," Novak says. "MOOSE is modular, so you can decouple the closed source parts into separate repositories, and collaborate on the open parts."

Fusing supercomputing with AI

While Gaston and Permann were lobbying for open source at the Idaho National Laboratory, other labs were working on their own projects. One of the earliest projects open sourced by LLNL was the organization’s own finite element library, MFEM, first released in 2010. Like MOOSE, MFEM is designed to make it easier for scientists to write simulation code. "Lots of projects at the lab needed the same things: advanced finite element meshing, discretizations and solvers, high-order methods, performance optimizations, parallel scalability, and the ability to take advantage of GPUs," explains Tzanio Kolev, co-creator of MFEM and a computational mathematician at LLNL's Center for Applied Scientific Computing. "MFEM takes care of that and lets you focus on the particular physics problem you need to solve."
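MFEM itself is a C++ library, and the Python sketch below does not use its API. It only illustrates, for the simplest possible case, the pipeline such a library automates at far larger scale: build a mesh, discretize the equation with finite elements, assemble a linear system, and solve it. The model problem (the 1D Poisson equation -u'' = f on [0, 1] with u = 0 at both ends), the linear "hat" basis functions, and the helper names are all assumptions chosen for illustration.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Solve a tridiagonal system with the Thomas algorithm (no pivoting)."""
    n = len(diag)
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i - 1] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i - 1] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def fem_poisson_1d(f, num_elements):
    """Solve -u'' = f on [0, 1] with u(0) = u(1) = 0 using linear finite elements.

    Returns the mesh nodes and the nodal values of the FEM solution.
    Requires num_elements >= 2 so there is at least one interior node.
    """
    h = 1.0 / num_elements
    nodes = [i * h for i in range(num_elements + 1)]  # uniform "mesh"
    n_interior = num_elements - 1
    # Assembled stiffness matrix for hat functions: (1/h) * tridiag(-1, 2, -1).
    diag = [2.0 / h] * n_interior
    off = [-1.0 / h] * (n_interior - 1)
    # Load vector, approximated by evaluating f at each interior node (lumped quadrature).
    rhs = [h * f(x) for x in nodes[1:-1]]
    interior = solve_tridiagonal(off, diag, off, rhs)
    # Re-attach the Dirichlet boundary values.
    return nodes, [0.0] + interior + [0.0]


if __name__ == "__main__":
    # Manufactured problem: with this source term the exact solution is sin(pi x).
    source = lambda x: math.pi ** 2 * math.sin(math.pi * x)
    for m in (8, 16, 32):
        xs, us = fem_poisson_1d(source, m)
        err = max(abs(u - math.sin(math.pi * x)) for x, u in zip(xs, us))
        print(f"{m:3d} elements: max nodal error = {err:.2e}")
```

Halving the element size should cut the maximum nodal error by roughly a factor of four, the second-order behavior expected for linear elements. Libraries like MFEM take on the parts that make this hard in practice: unstructured 3D meshes, high-order elements, parallel solvers, and GPUs.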

Today, MFEM's multiphysics simulations are augmented with an approach to AI/ML that LLNL calls “cognitive simulation (CogSim).” The organization's experiments create far more data than humans can process, but that data can be used to train AI models. Essentially, researchers build a simulation with a selected set of parameters, such as the intensity of the lasers. Then they enhance the simulation with the CogSim system, which applies its own models, trained on the large data sets collected in previous experiments, to add more nuance. With those models, they can then predict the likelihood of success for different experiments. CogSim gave the December 2022 ignition experiment a slightly better than 50% chance of success. That might not sound like great odds, explains J. Luc Peterson, a physicist at LLNL, but it was far better than previous experiments, which had only a 17% chance of success. The data collected from each experiment is then fed back into the system to make future simulations and predictions more accurate.
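LLNL's CogSim code is not open source, so the Python sketch below is not its method. It is a deliberately tiny illustration of the loop the paragraph describes: fit a model to past outcomes, use it to estimate the chance of success for a new design, then fold the new result back in and refit. The simulator, its 0.6 threshold, the normalized "intensity" parameter, and the choice of a one-variable logistic regression are all hypothetical stand-ins, and the outcomes are synthetic, not NIF data.

```python
import math


def hypothetical_simulator(intensity: float) -> int:
    """Toy stand-in for an expensive simulation or experiment: 1 = success, 0 = failure.

    The 0.6 threshold is invented purely for illustration.
    """
    return 1 if intensity > 0.6 else 0


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.5, steps=5000):
    """Fit p(success | x) = sigmoid(w * x + b) with batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of log loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(model, x: float) -> float:
    """Return the model's estimated probability of success at design value x."""
    w, b = model
    return sigmoid(w * x + b)


# 1. Past runs: a coarse sweep over a normalized (hypothetical) design parameter.
designs = [i / 10 for i in range(11)]
outcomes = [hypothetical_simulator(x) for x in designs]
model = fit_logistic(designs, outcomes)

# 2. Estimate the chance of success for a new candidate design.
candidate = 0.65
print(f"Estimated success probability at {candidate}: {predict(model, candidate):.2f}")

# 3. Feedback: run the candidate, add its outcome to the data set, and refit.
designs.append(candidate)
outcomes.append(hypothetical_simulator(candidate))
model = fit_logistic(designs, outcomes)
print(f"After refitting: {predict(model, candidate):.2f}")
```

Real workflows use far larger data sets and far richer models, but the fit, predict, and refit cycle mirrors the feedback loop described above.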

The CogSim platform is expected to play a bigger role in NIF's research in the future. "We're exploring the use of CogSim in the design process," Peterson says. "It can free our minds, let our human biases get out of the way." For example, the NIF ignition breakthrough involved a tiny, spherical, diamond capsule that contained the two fusion fuels. An earlier CogSim study found that an experiment using an egg-shaped (instead of spherical) implosion might be more resilient to imperfections in the diamond. NIF is also experimenting with using CogSim to guide experiments by analyzing data in real time and suggesting adjustments on the fly.

Like other labs, LLNL still keeps some of its work proprietary, including the code behind these AI simulations. But in 2019, the organization did open source Merlin, a tool built to manage AI workflows and fill a gap left by modern machine learning tools, which didn't always translate well to HPC systems.

"The fundamental tension is that HPC systems were designed to run huge jobs, like gigantic 3D multiphysics simulations," says Peterson. "But in machine learning, you don't want one big job, you want lots of smaller ones." The NIF team needs to run millions of simulations, but HPC batch job schedulers simply weren't equipped to handle that many jobs without getting bogged down. Peterson's team built Merlin on top of existing open source projects like Beanstalk, Celery, Redis, RabbitMQ, and LLNL's own Maestro to solve exactly that problem. "Without Merlin, you'd spend more time managing jobs than doing simulations," he says.
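Merlin builds on Celery workers and brokers such as RabbitMQ and Redis for this. The standard-library Python sketch below is not Merlin's API; it only shows, on a single machine, the producer and worker-pool pattern underneath that design: one long-lived pool of workers drains a queue of many small tasks, instead of each tiny run being submitted to the batch scheduler as a separate job. `toy_simulation`, `run_ensemble`, and the parameter sweep are hypothetical.

```python
import queue
import threading


def toy_simulation(params: dict) -> float:
    """Hypothetical stand-in for one small, fast simulation run."""
    return params["intensity"] ** 2


def worker(tasks: queue.Queue, results: dict, lock: threading.Lock) -> None:
    """Pull (task_id, params) items until a None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:  # stop signal
            break
        task_id, params = item
        value = toy_simulation(params)
        with lock:
            results[task_id] = value


def run_ensemble(param_sets, num_workers=4):
    """Queue many small jobs and let a fixed pool of workers drain them."""
    tasks: queue.Queue = queue.Queue()
    results: dict = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(tasks, results, lock))
        for _ in range(num_workers)
    ]
    for t in threads:
        t.start()
    # Producer side: enqueue every task, then one sentinel per worker.
    for task_id, params in enumerate(param_sets):
        tasks.put((task_id, params))
    for _ in threads:
        tasks.put(None)
    for t in threads:
        t.join()
    # Return results in submission order, regardless of completion order.
    return [results[i] for i in range(len(param_sets))]


if __name__ == "__main__":
    sweep = [{"intensity": i / 1000} for i in range(1000)]
    outputs = run_ensemble(sweep)
    print(f"Completed {len(outputs)} runs")
```

In a Merlin-style setup, the batch scheduler only has to manage the allocations that host the workers, while the queue absorbs the churn of thousands of short tasks.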

Not just for nuclear scientists

Many of these projects that started with nuclear science in mind are applicable to just about any field that benefits from using supercomputers. MFEM, for example, is also used in LLNL's cardiac simulation toolkit Cardioid, its crystal plasticity application ExaConstit, and its thermomechanical simulation code Serac. It is also heavily used by the broader scientific community, including industry and academia, in applications such as MRI research at Harvard Medical School and quantum computing hardware simulation at Amazon. MOOSE is widely used outside of the nuclear field, with applications in areas such as groundwater modeling and other geoscience use cases. During the early days of the Covid-19 pandemic, researchers at LLNL used Merlin to anticipate outbreaks and Maestro for antibody modeling.

Open source also paves the way for anyone, regardless of their scientific background, to pitch in and help push science forward. Many of these projects can benefit from experienced software engineers, as software engineering isn't always a strength for scientific researchers. "The scientific community is trying to learn best software development practices, but there's a lot to learn from professional developers," says MIT’s Peterson. "There are lots of opportunities for experienced developers to help build CI/CD pipelines, write unit tests, and generally help create higher-quality codebases."
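For developers who haven't worked on scientific code, here is a hedged Python sketch of what a unit test can look like there: rather than comparing against one hard-coded output, it checks a numerical routine against a known analytic answer and checks that the error shrinks at the expected rate. The `trapezoid` function is a hypothetical stand-in, not code from MOOSE, MFEM, or OpenMC; the tests follow pytest's convention of plain `test_` functions using `assert`.

```python
import math


def trapezoid(f, a: float, b: float, n: int) -> float:
    """Approximate the integral of f over [a, b] with the composite trapezoidal rule."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return h * total


def test_matches_analytic_integral():
    # The integral of sin(x) from 0 to pi is exactly 2.
    assert math.isclose(trapezoid(math.sin, 0.0, math.pi, 1000), 2.0, abs_tol=1e-5)


def test_second_order_convergence():
    # For a smooth integrand, halving the step size should cut the error by about 4x.
    err_coarse = abs(trapezoid(math.sin, 0.0, math.pi, 50) - 2.0)
    err_fine = abs(trapezoid(math.sin, 0.0, math.pi, 100) - 2.0)
    assert math.isclose(err_coarse / err_fine, 4.0, rel_tol=0.02)
```

Running `pytest` on a file containing these functions collects and runs both tests. A convergence check like the second one can catch subtle discretization bugs that still happen to land within a loose tolerance on a single expected value.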

Contributing to a project's upstream dependencies is another way to help out, LLNL’s Peterson says. Merlin, for example, relies heavily on the Python-based distributed task queue Celery. And, like practically all open source projects, these need help with documentation and bug reports. "We can never get enough documentation, and not all of it needs to be written by specialists," Gaston says.

Opening repositories to specialists and non-specialists alike for collaboration is a far cry from the cloak-and-dagger image that the nuclear physics field cultivated over the decades. But it’s a big part of what pushes science forward as creators gain valuable contributions from outside their own organizations. “We have hundreds of contributors at this point,” says Permann, the manager of the MOOSE project. “It’s not just the National Labs and universities either. Private companies are a little more cautious about what they contribute to open source, but they help out as well and there’s a mutual benefit.”

It also encourages the teams to write better code in the first place. "We treat every pull request like it's a submission to an academic journal," Kolev says. "When you know your code is going to be scrutinized by other people, it sets a higher bar."

Though secrecy still has its place, it takes openness to keep moving forward.